Sharp-NeRF: Grid-based Fast Deblurring Neural Radiance Fields Using Sharpness Prior

WACV 2024

Byeonghyeon Lee1*, Howoong Lee1,2*, Usman Ali1, and Eunbyung Park1

1Sungkyunkwan University, 2Hanwha Vision

*Equal contribution

Abstract

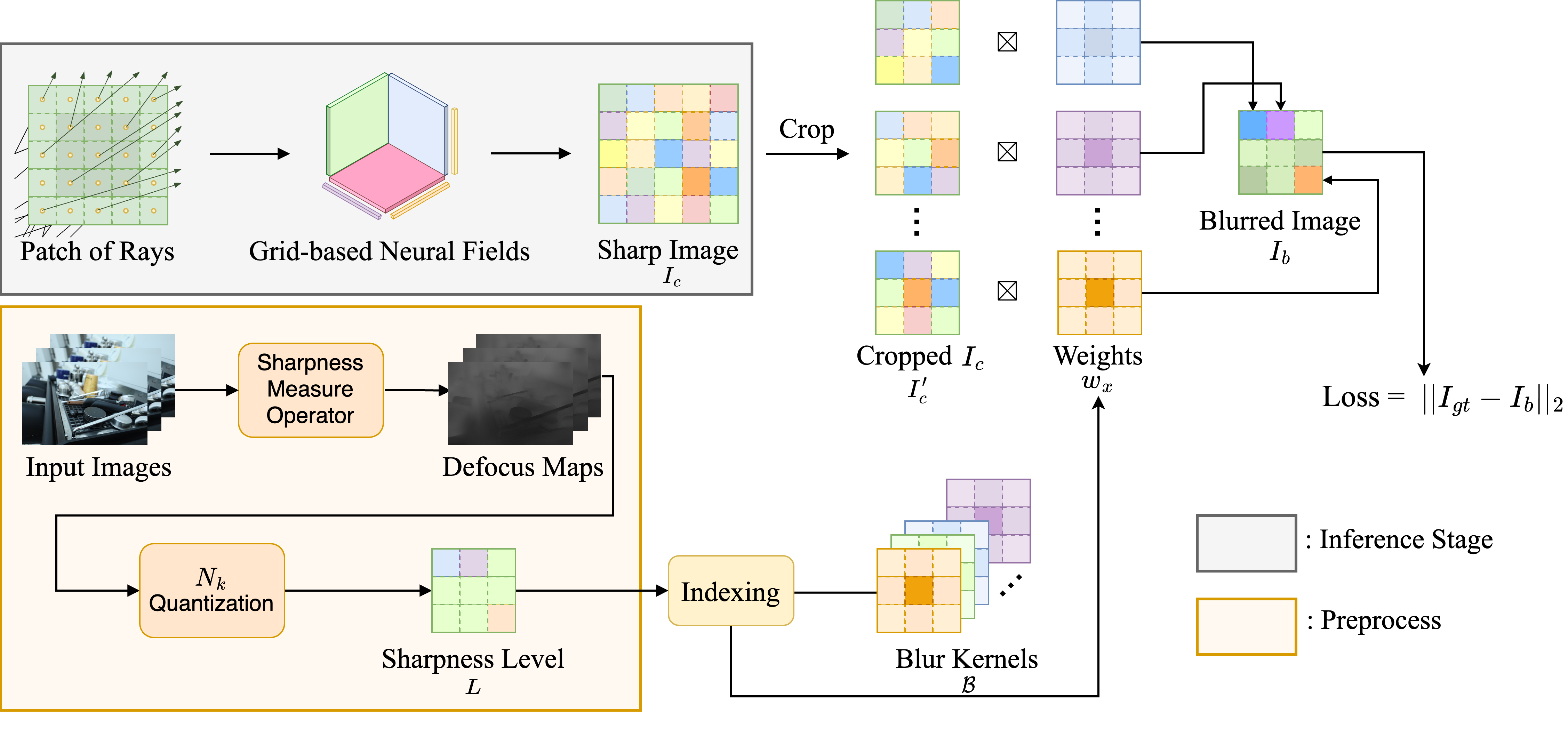

Neural Radiance Fields (NeRF) have shown remarkable performance in neural rendering-based novel view synthesis. However, NeRF suffers from severe visual quality degradation when the input images are captured under imperfect conditions, such as poor illumination, defocus blur, and lens aberrations. Defocus blur in particular is common in images captured with ordinary cameras. Although a few recent studies have proposed rendering sharp images of considerably high quality, they still face key challenges. In particular, those methods employ a Multi-Layer Perceptron (MLP) based NeRF, which requires tremendous computational time. To overcome these shortcomings, this paper proposes Sharp-NeRF, a grid-based NeRF that renders clean and sharp images from blurry input images within half an hour of training. To do so, we use several grid-based kernels to accurately model the sharpness/blurriness of the scene. The sharpness level of each pixel is computed to learn the spatially varying blur kernels. We conducted experiments on benchmarks consisting of blurry images and evaluated them with full-reference and no-reference metrics. The qualitative and quantitative results show that our approach renders sharp novel views with vivid colors and fine details, and that it trains considerably faster than previous works.

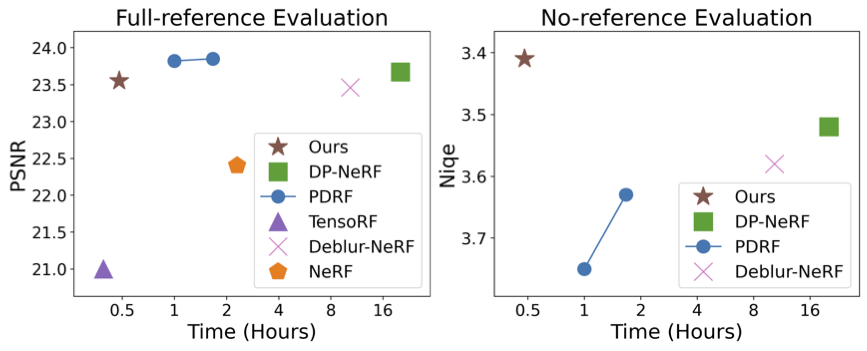

Comparison in terms of training time and image quality on the real defocus dataset. Left: evaluated under a full-reference metric (PSNR). Right: evaluated under a no-reference metric (NIQE).

Method

Sharpness Prior

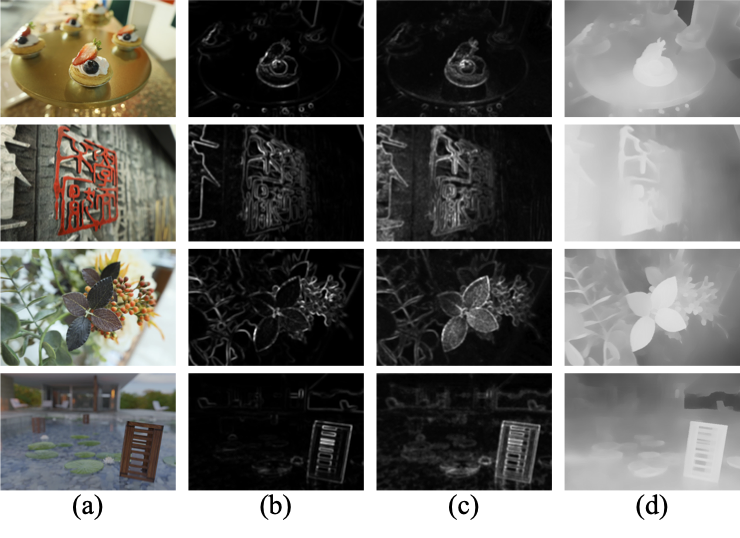

We propose to leverage a sharpness prior to accurately model blur so that a sharp NeRF can be learned. Applying this prior determines the sharpness level of each image pixel, and this sharpness information plays a key role in learning suitable blur kernels for those pixels.

Comparison of sharpness maps provided by various sharpness priors. (a): Sample images, and sharpness maps from (b): Tenengrad, (c): SML, and (d): DMENet.
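As a rough illustration of how a sharpness prior can assign a level to each pixel, the sketch below computes a Tenengrad response (squared Sobel gradient magnitude) with NumPy and buckets it into discrete levels. The function names, the uniform bucketing, and the convention that level 0 is the sharpest are assumptions made for this example, not details taken from the paper.

```python
import numpy as np

def tenengrad_map(gray: np.ndarray) -> np.ndarray:
    """Per-pixel Tenengrad response: squared Sobel gradient magnitude."""
    gray = gray.astype(np.float64)
    p = np.pad(gray, 1, mode="edge")  # replicate borders so output keeps (H, W)
    # Horizontal Sobel: right column minus left column, weights 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    # Vertical Sobel: bottom row minus top row, weights 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return gx ** 2 + gy ** 2

def sharpness_levels(sharpness: np.ndarray, num_levels: int) -> np.ndarray:
    """Bucket a sharpness map into integer levels (assumption: 0 = sharpest)."""
    lo, hi = sharpness.min(), sharpness.max()
    # Interior bucket edges, uniformly spaced between min and max response.
    edges = np.linspace(lo, hi, num_levels + 1)[1:-1]
    return (num_levels - 1) - np.digitize(sharpness, edges)

# Toy image: a vertical step edge between columns 3 and 4.
image = np.zeros((8, 8))
image[:, 4:] = 1.0
sharpness = tenengrad_map(image)
levels = sharpness_levels(sharpness, num_levels=2)
```

Only the pixels next to the step edge receive a nonzero response here. Textureless regions also score low under gradient-based measures such as Tenengrad, which is one reason different priors can produce different sharpness maps.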

Grid-based Blur Kernel

Our grid-based blur kernels can be directly optimized to model the spatially varying blurriness of the given scene, in contrast to previous works that employed an MLP to generate blur kernels.

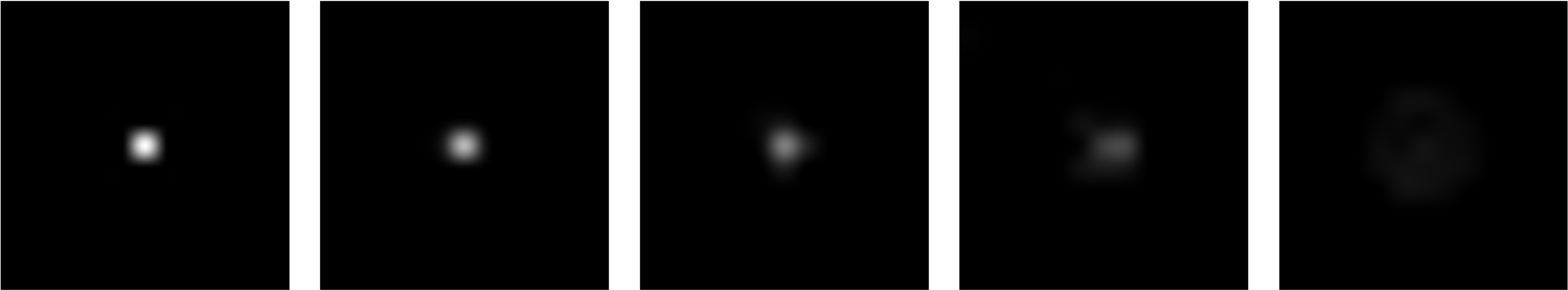

Visualization of blur kernels. From left to right, the kernel values become more widely spread, which implies that the leftmost kernel is responsible for sharp regions and the rightmost kernel for blurry regions.
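To make the idea of a small bank of directly stored kernels concrete, here is a minimal NumPy sketch in which each pixel picks one kernel from the bank according to its sharpness level and is blurred with it. This is only an illustration under stated assumptions: the function `apply_level_kernels`, the two fixed kernels, and the level layout are hypothetical, and in the actual method the kernels would be optimized during training rather than set by hand.

```python
import numpy as np

def apply_level_kernels(sharp: np.ndarray,
                        levels: np.ndarray,
                        kernels: np.ndarray) -> np.ndarray:
    """Blur `sharp` (H, W, C) using, at each pixel, the kernel indexed by `levels`.

    kernels has shape (N, K, K) with K odd; each kernel should sum to 1.
    Kernels are applied as a correlation (no flip), which is equivalent to a
    convolution for symmetric kernels.
    """
    _, k, _ = kernels.shape
    r = k // 2
    h, w, _ = sharp.shape
    padded = np.pad(sharp, ((r, r), (r, r), (0, 0)), mode="edge")
    per_pixel = kernels[levels]  # (H, W, K, K): one kernel per pixel
    out = np.zeros(sharp.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            # Weight of this kernel tap at every pixel times the shifted image.
            out += per_pixel[:, :, dy, dx, None] * padded[dy:dy + h, dx:dx + w]
    return out

# Hypothetical two-kernel bank: level 0 is sharp (delta), level 1 is blurry (box).
delta = np.zeros((3, 3))
delta[1, 1] = 1.0
box = np.full((3, 3), 1.0 / 9.0)
kernels = np.stack([delta, box])

rng = np.random.default_rng(0)
image = rng.random((6, 6, 3))
levels = np.zeros((6, 6), dtype=int)
levels[:, 3:] = 1  # right half of the image is treated as blurry
blurred = apply_level_kernels(image, levels, kernels)
```

Pixels at level 0 pass through unchanged, while pixels at level 1 become the average of their 3 × 3 neighborhood, mirroring the narrow-to-wide spread described in the caption above.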

Patch Sampling

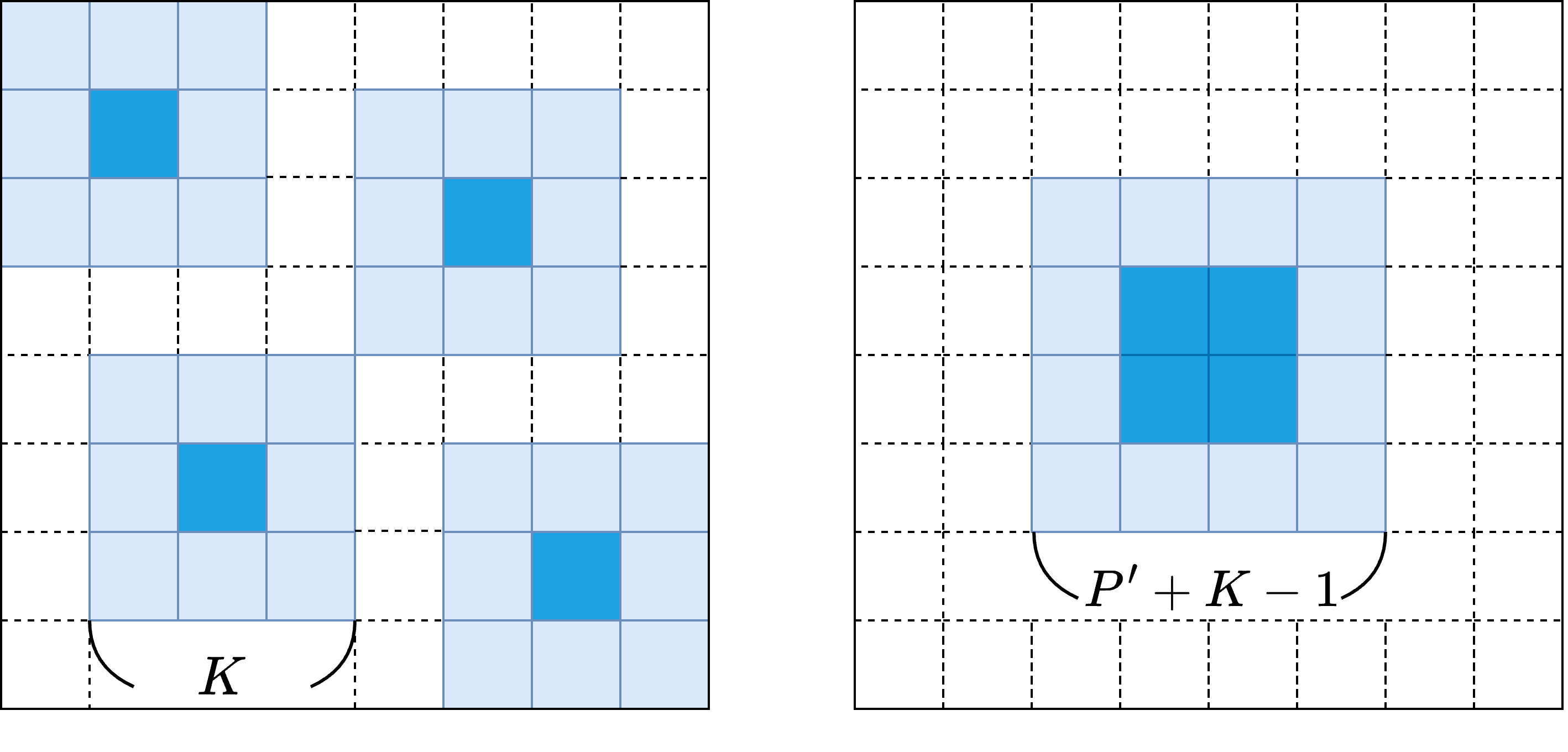

With random patch sampling, we reduce the number of required neighboring rays per ray from $K^2$ to $\frac{(P'+K+1)^2}{{P'}^2}$, where $K \times K$ is the blur kernel size and $P' \times P'$ is the size of the sampled patch.

Left: random ray sampling. Right: random patch sampling. Blue pixels are the P′ × P′ pixels of interest to be rendered, and sky-blue pixels are the neighboring pixels required for the blur convolution.
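The saving can be checked with a few lines of arithmetic. The snippet below simply evaluates the two expressions given above; the kernel size `k = 9` and patch size `p = 32` are hypothetical values chosen for illustration, not settings reported by the authors.

```python
def rays_per_ray_random(k: int) -> float:
    """Neighboring rays needed per rendered ray with random ray sampling: K^2."""
    return float(k * k)

def rays_per_ray_patch(p: int, k: int) -> float:
    """Neighboring rays per rendered ray with patch sampling.

    Evaluates the expression exactly as stated in the text above:
    (P' + K + 1)^2 / P'^2.
    """
    return (p + k + 1) ** 2 / p ** 2

k, p = 9, 32  # hypothetical kernel and patch sizes
random_cost = rays_per_ray_random(k)
patch_cost = rays_per_ray_patch(p, k)
print(f"random sampling: {random_cost:.2f} rays per ray")
print(f"patch sampling:  {patch_cost:.2f} rays per ray")
```

With these example values the per-ray cost drops from 81 to roughly 1.72, because neighboring pixels inside a patch share most of the rays needed for the blur convolution.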

Defocus Blur Dataset

BibTeX

We used the project page of Fuzzy Metaballs as a template, and our interactive demo is based on NeRF-Factory.