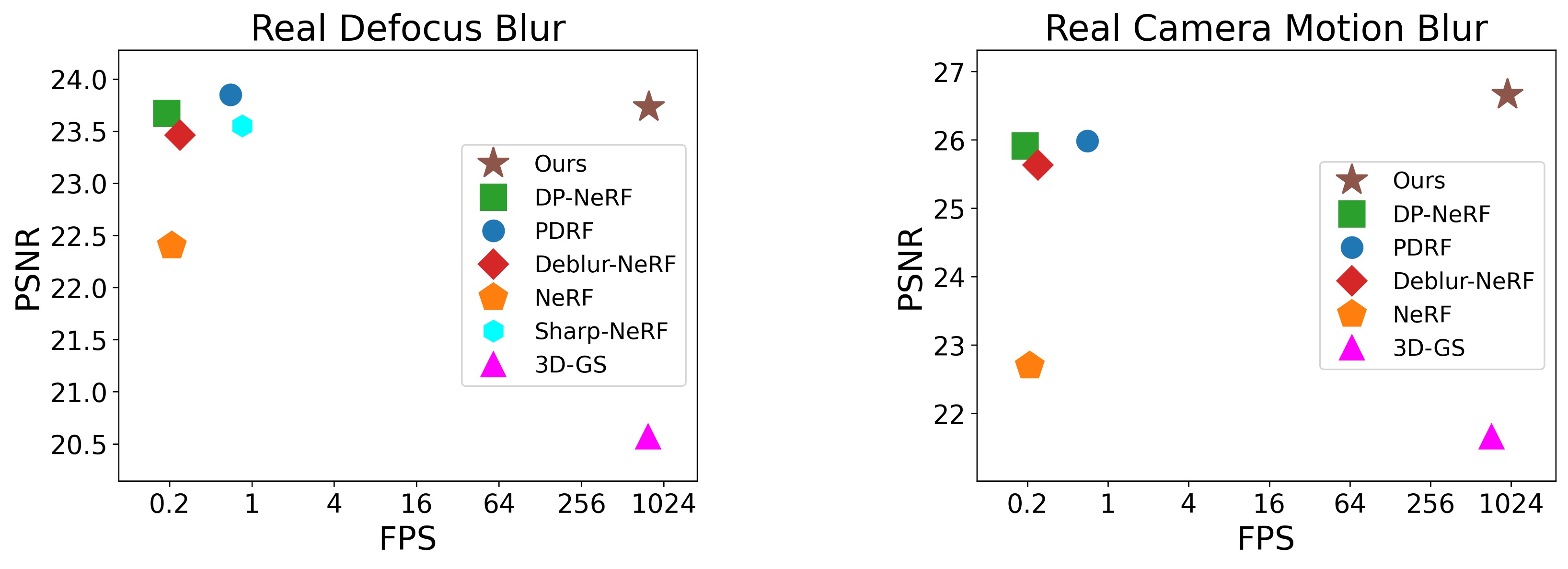

Novel-view synthesis of scenes acquired with several images or videos has been revolutionized by Radiance Field techniques. However, their high visual quality was achieved only with neural networks, which are costly to train and do not provide real-time rendering. Recently, a 3D Gaussian splatting-based approach has been proposed to model the 3D scene; it achieves state-of-the-art visual quality while rendering in real time. However, this approach suffers from severe degradation in rendering quality if the training images are blurry. Several previous studies have attempted to render clean and sharp images from blurry input images using neural fields. However, the majority of those works are designed only for volumetric rendering-based neural fields and are not applicable to rasterization-based approaches. To fill this gap, we propose a novel neural field-based deblurring framework for the recently proposed rasterization-based approach, 3D Gaussian Splatting (3D-GS). Specifically, we employ a small Multi-Layer Perceptron (MLP), which manipulates the covariance of each 3D Gaussian to model the scene blurriness. Deblurring 3D Gaussian Splatting still enjoys real-time rendering while reconstructing fine and sharp details from blurry images. A variety of experiments have been conducted on the benchmark, and the results demonstrate the effectiveness of our approach for deblurring.

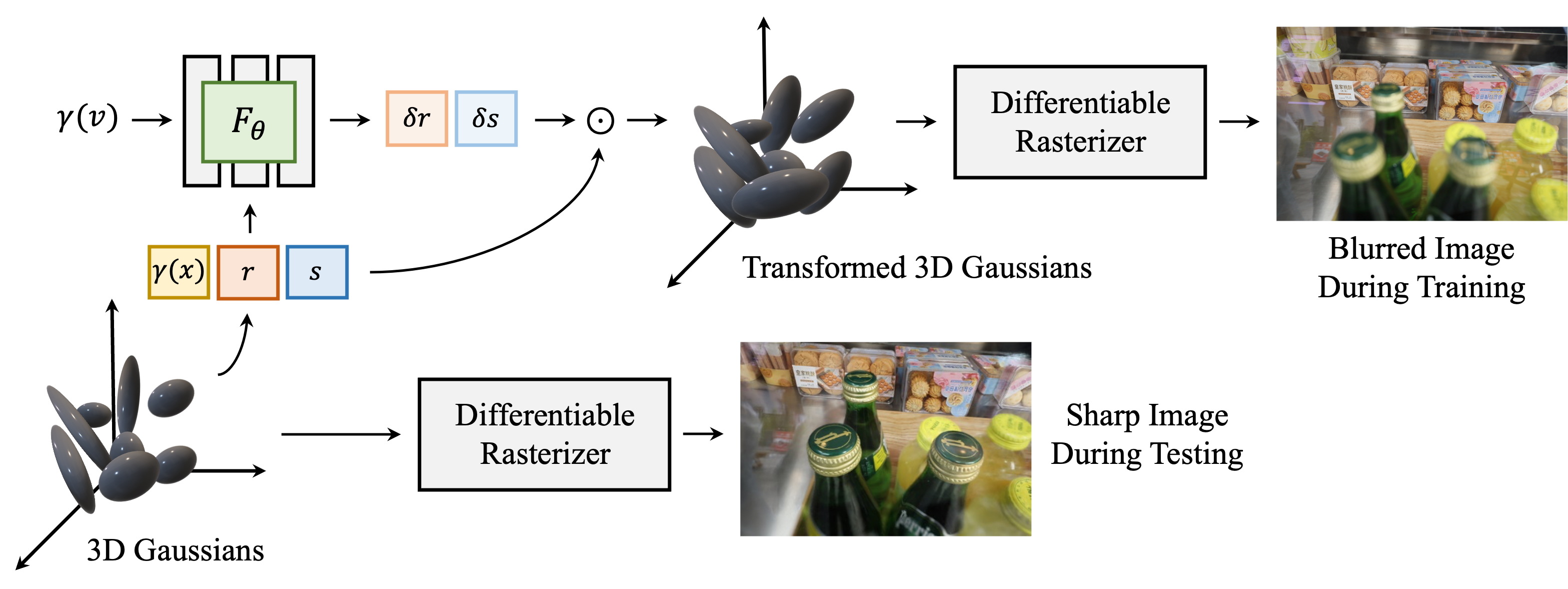

We learn deblurring by transforming the geometry of the 3D Gaussians. To do so, we employ an MLP that takes the position, rotation, scale, and viewing direction of each 3D Gaussian as input and outputs offsets for rotation and scale. These offsets are then multiplied element-wise with the rotation and scale, respectively, to obtain the transformed geometry of the 3D Gaussians.
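The transformation described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the released implementation: the names `mlp_forward` and `transform_gaussian`, the single hidden layer, the quaternion layout of the rotation, and the choice to form each offset as `1 + output` (so that a zero output leaves the Gaussian unchanged) are all hypothetical.

```python
def mlp_forward(x, w1, b1, w2, b2):
    """Tiny MLP with one ReLU hidden layer; weights are lists of rows."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]


def transform_gaussian(position, rotation, scale, view_dir, params):
    """Return the transformed (rotation, scale) of one 3D Gaussian.

    position: 3 floats, rotation: 4 floats (quaternion, assumed layout),
    scale: 3 floats, view_dir: 3 floats, params: (w1, b1, w2, b2).
    """
    # Concatenate the four inputs named in the text: 3 + 4 + 3 + 3 = 13 values.
    features = list(position) + list(rotation) + list(scale) + list(view_dir)
    out = mlp_forward(features, *params)  # 7 values: 4 rotation + 3 scale

    # Assumption: offsets are 1 + raw output, so zero output means "no change".
    d_rot = [1.0 + o for o in out[:4]]
    d_scale = [1.0 + o for o in out[4:]]

    # Element-wise multiplication of offsets with rotation and scale.
    new_rotation = [r * d for r, d in zip(rotation, d_rot)]
    new_scale = [s * d for s, d in zip(scale, d_scale)]
    return new_rotation, new_scale


def zero_params(n_in=13, n_hidden=8, n_out=7):
    """All-zero weights: the MLP then predicts identity offsets."""
    w1 = [[0.0] * n_in for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [[0.0] * n_hidden for _ in range(n_out)]
    b2 = [0.0] * n_out
    return w1, b1, w2, b2
```

With `zero_params()` the Gaussian is returned unchanged. Setting the last three output biases to `0.5` multiplies every scale component by `1.5`, which is the kind of enlargement that would model a blurred region.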

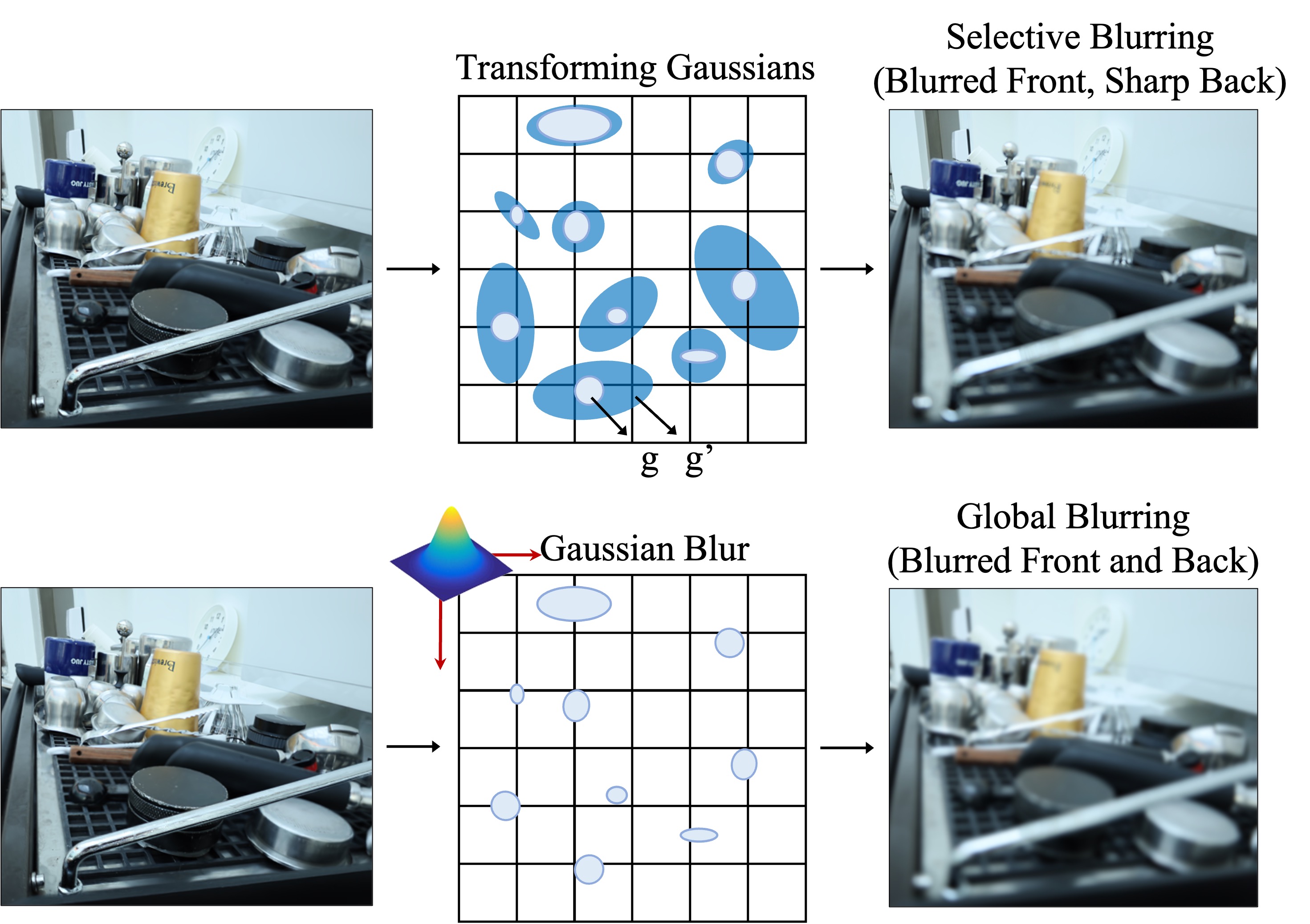

Since we predict offsets for each Gaussian, we can selectively enlarge the covariances of the Gaussians that correspond to blurred parts of the training images. This flexibility enables us to effectively implement deblurring capability in 3D-GS. In contrast, a naive approach to blurring the rendered image is simply to apply a Gaussian kernel, which cannot handle each part of the image differently and instead blurs the entire image.
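To make "enlarging the covariance" concrete, the sketch below builds a covariance from a rotation quaternion and a scale vector using the standard 3D-GS factorization Σ = R S Sᵀ Rᵀ, then compares a Gaussian left untouched with one whose scale is multiplied by an offset. The function names, the `(w, x, y, z)` quaternion order, and the example offset of `2.0` are assumptions made for illustration.

```python
import math


def quat_to_rotmat(q):
    """Rotation matrix from a quaternion (w, x, y, z); normalizes first."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(rotation, scale):
    """Sigma = R S S^T R^T, with S = diag(scale)."""
    R = quat_to_rotmat(rotation)
    return [[sum(R[i][k] * scale[k] ** 2 * R[j][k] for k in range(3))
             for j in range(3)]
            for i in range(3)]


rotation = [1.0, 0.0, 0.0, 0.0]   # identity rotation
scale = [1.0, 2.0, 3.0]

# Gaussian in a sharp region: scale offset of 1 leaves it unchanged.
sharp = covariance(rotation, [s * 1.0 for s in scale])
# Gaussian in a blurred region: hypothetical scale offset of 2.
blurred = covariance(rotation, [s * 2.0 for s in scale])
```

Because each Gaussian gets its own offset, only the second covariance grows (by a factor of four along every axis here), whereas a single image-space Gaussian kernel would widen every footprint by the same amount.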

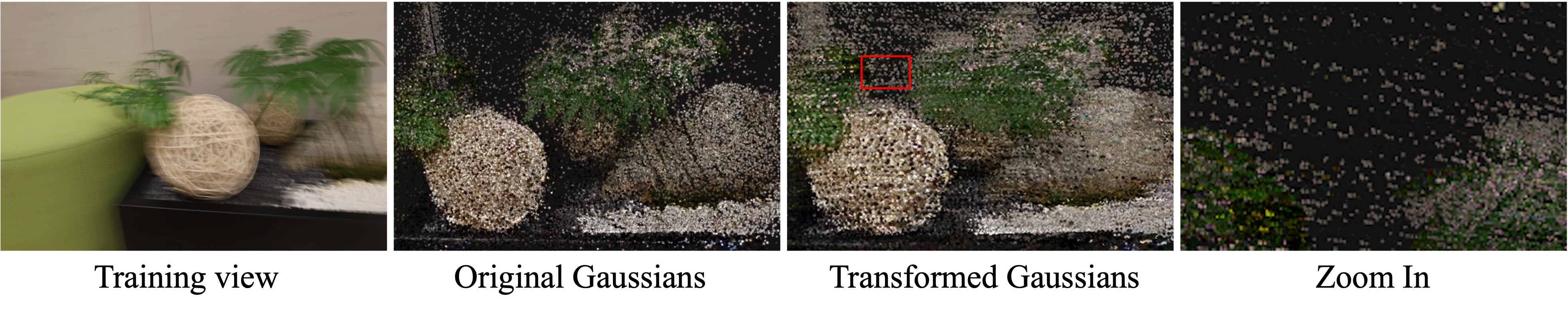

3D-GS's reconstruction quality relies heavily on the initial point cloud, which is obtained from structure-from-motion (SfM). However, SfM produces only sparse point clouds if the given images are blurry. Even worse, if the scene has a large depth of field, which is prevalent in defocus-blurred scenes, SfM hardly extracts any points that lie at the far end of the scene. To make the point cloud denser, we add points on the periphery of the existing points using the K-Nearest-Neighbor (KNN) algorithm during training. Furthermore, we prune 3D Gaussians depending on their relative depth: we prune the 3D Gaussians placed at the far edge of the scene more loosely, so that more 3D Gaussians are kept on the far plane.
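The two steps above can be sketched as follows. The text does not specify how new points are initialized or how the pruning criterion depends on depth, so everything concrete here is an assumption: the helper names, averaging the colors of the `k` nearest neighbors, rejecting candidates farther than `max_dist` from any existing point, and a pruning threshold that relaxes linearly with relative depth.

```python
import math


def k_nearest(points, query, k):
    """Indices of the k existing points closest to query (brute force)."""
    order = sorted(range(len(points)),
                   key=lambda i: math.dist(points[i], query))
    return order[:k]


def add_point(points, colors, candidate, k=2, max_dist=1.0):
    """Try to add `candidate` near the existing cloud.

    The new point takes the mean color of its k nearest neighbors.
    Candidates with no neighbor within max_dist are rejected, which keeps
    new points on the periphery of existing ones (assumed rule).
    """
    idx = k_nearest(points, candidate, k)
    if math.dist(points[idx[0]], candidate) > max_dist:
        return False
    color = [sum(colors[i][c] for i in idx) / len(idx) for c in range(3)]
    points.append(list(candidate))
    colors.append(color)
    return True


def prune_threshold(depth, min_depth, max_depth, base=0.01, far_factor=0.5):
    """Pruning threshold that loosens with relative depth (assumed form).

    Near Gaussians use `base`; the farthest use `base * far_factor`,
    so fewer far Gaussians fall below the threshold and get pruned.
    """
    if max_depth == min_depth:
        return base
    rel = (depth - min_depth) / (max_depth - min_depth)
    return base * (1.0 - rel * (1.0 - far_factor))
```

A Gaussian would then be pruned when its opacity (or whichever quantity the pruning rule uses) falls below `prune_threshold(depth, ...)`, so Gaussians on the far plane survive more easily than those on the near plane.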

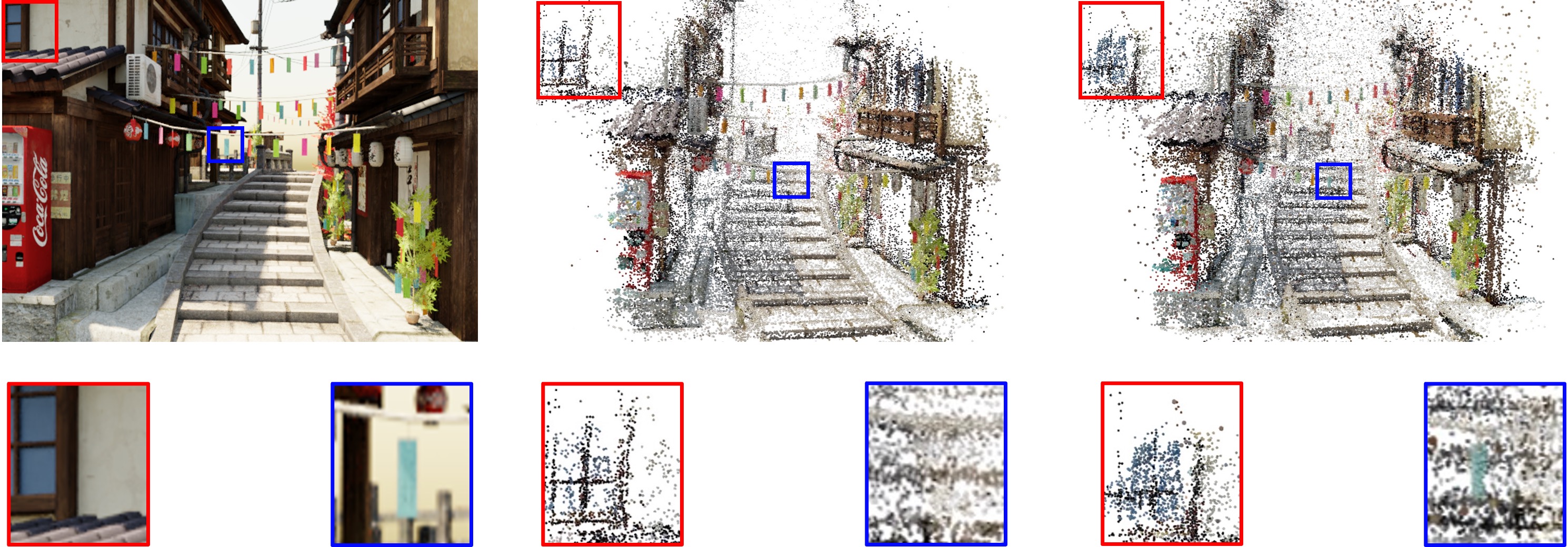

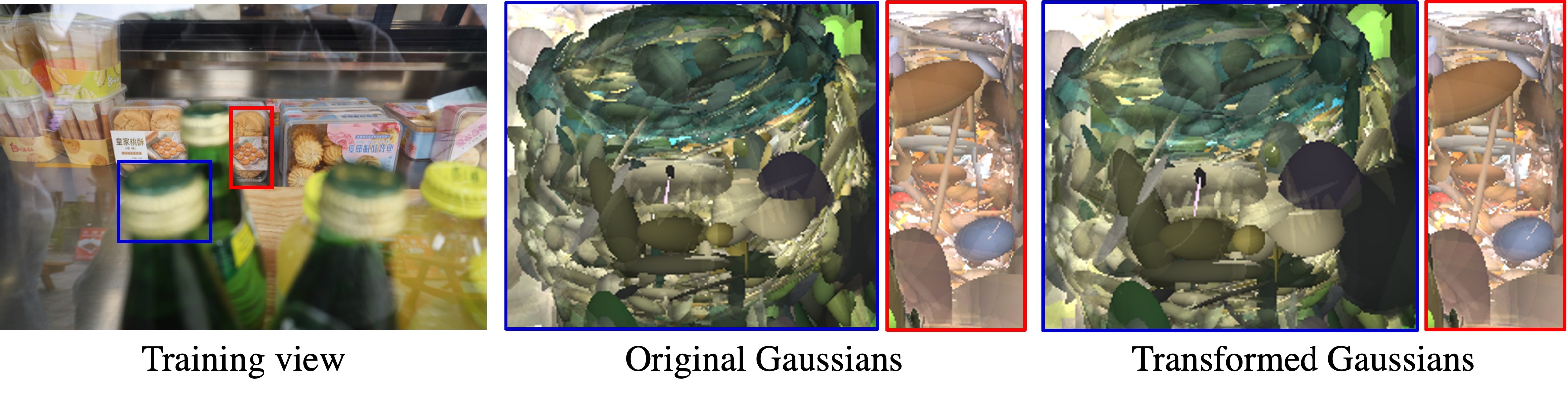

This figure visualizes the original and transformed 3D Gaussians for defocus blur. For a given view whose near plane is defocused, the transformed 3D Gaussians have larger scales than the original 3D Gaussians in order to model defocus blur on the near plane (blue-bordered images). Meanwhile, the transformed 3D Gaussians keep shapes very similar to the original ones for sharp objects in the far plane (red-bordered images).

This figure depicts point clouds of the original and transformed 3D Gaussians. The point cloud of the transformed 3D Gaussians reflects the camera movement when the camera moves from left to right.

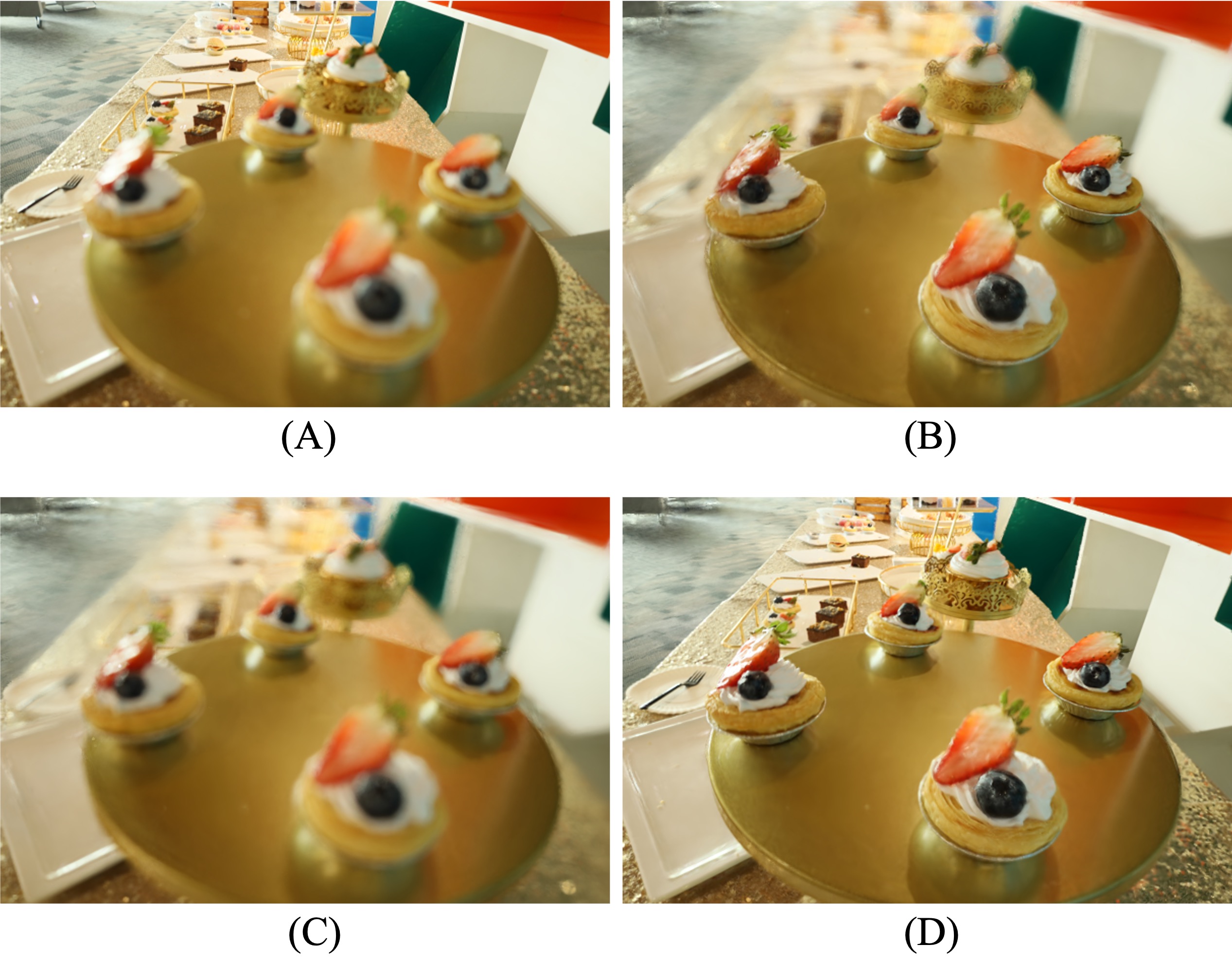

Selective Gaussian blur adjustment. As shown in this figure, our method uses δr_j and δs_j, both produced by the small Multi-Layer Perceptron (MLP), to invert which regions are blurred or to adjust the overall blurriness and sharpness. By transforming δr_j and δs_j, our framework can blur near regions, as in A, or far regions, as in B. It can also adjust the global blurriness or sharpness, as in C and D.
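One simple way to picture this kind of adjustment is to rescale how far each predicted offset deviates from 1. The sketch below is a hypothetical control, not the procedure used to produce the figure: the `strength` parameter, the depth-range selection, and both function names are assumptions.

```python
def modulate_offsets(offsets, strength):
    """Rescale each offset's deviation from 1.

    strength = 0 removes the predicted blur (all offsets become 1),
    strength = 1 keeps the prediction, strength > 1 exaggerates it.
    """
    return [1.0 + strength * (d - 1.0) for d in offsets]


def modulate_in_depth_range(offsets, depths, strength, near, far):
    """Apply modulate_offsets only to Gaussians with near <= depth <= far."""
    return [1.0 + strength * (d - 1.0) if near <= z <= far else d
            for d, z in zip(offsets, depths)]
```

Applying `modulate_offsets` to every Gaussian changes the global blurriness, while `modulate_in_depth_range` touches only a chosen depth band, for example near regions or far regions.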

@misc{lee2024deblurring,
  title={Deblurring 3D Gaussian Splatting},
  author={Byeonghyeon Lee and Howoong Lee and Xiangyu Sun and Usman Ali and Eunbyung Park},
  year={2024},
  eprint={2401.00834},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}